1. Introduction

As large language models (LLMs) are increasingly deployed for chatbots, personal assistants, and translators, it is crucial to have an efficient inference-serving system capable of handling billions of daily requests. However, language models with enormous parameters require substantial compute and memory resources. Furthermore, scheduling requests is essential to achieve high throughput and low latency in an industry-scale multi-node deployment. This survey classifies recent research trends into three categories and focuses on five papers.

KV Cache Management & Memory Optimization. KV Cache is the key-value pairs that were generated from previous prompt, and subsequently being reused in future token generation to speed up inference performance at the cost of memory usage. Recent advancements focus on continuous virtual memory blocks for attention and on sharing the KV cache for similar prompts.

Prefill-Decode Scheduling and Placement Strategy. Prefill and Decode are two distinct stages in inference with different hotspots. Prefill is compute-bound, while Decode is memory-bound. Recent research has attempted to balance the throughput-latency trade-off using chunked prefills. Though eventually realised that the trade-off will always exist unless we disaggregate prefill and decode. A good placement strategy is needed to reduce communication overhead.

Multi-Node Deployment. In a large-scale industry setting, the deployment plan for LLM has to account for network bandwidth and model instance placement on heterogeneous GPUs. Various formulations, such as Max Flow and Tabu search, can be used to determine the optimal allocation. Lightweight rescheduling has also been implemented to detect shifts in workload.

2. LLM Inference Framework

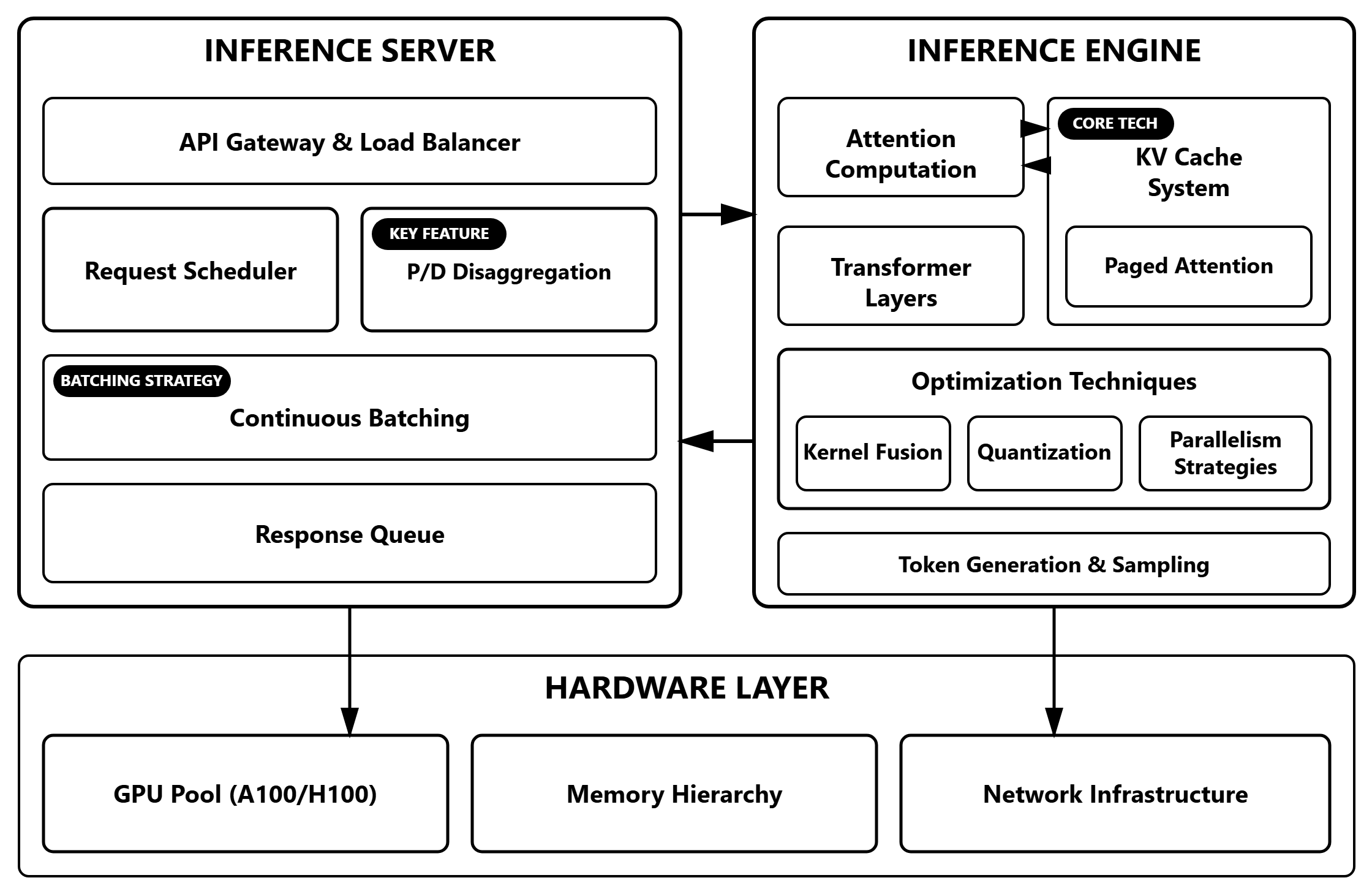

In the LLM inference framework, there are two important concepts: algorithm-level prefill-decode and system-level inference server and engine.

Prefill and decode are two distinct stages in inference, both of which interact extensively with KV Cache. Prefill is the stage where it takes the user’s input as a whole, computes the KV cache, and then predicts the first token, measured by Time-to-first-token (TTFT). Once the KV cache is computed and stored, the next iteration reuses the previously generated KV cache to decode the next token until a sentence reaches its maximum token length, or at which point an End-of-Sequence token is emitted. The throughput is measured as Time-per-output-token (TPOT). As the context size grows, implementing KV cache reduces the computational complexity of generating a new token from O(N²) to O(N), essentially trading memory for speed.

Inference Server and Engine build up the architecture of LLM serving. By segregating the code into server and engine, we expose optimisation opportunities at each level. For the inference server, we receive requests from users, then put them in queues for processing. Additionally, we could have separate queues for prefill and decode, and implement disaggregation to reduce interference, or use continuous batching (iteration-level scheduling) to maximise GPU utilisation when we have inputs of different sequence lengths. In an inference engine, we execute the next word token prediction task. We could implement optimisations such as paged attention and flash attention, or even enable kernel fusion and quantisation to maximise performance.

3. Current Research Trends

3.1 KV Cache Management and Transfer

Previous inference-serving systems, such as Orca [15], suffer from two types of memory fragmentation: internal and external. Internal fragmentation occurs when the system preallocates a contiguous chunk of memory to store the KV cache, adhering to the maximum context size (i.e., 2048 tokens). External fragmentation occurs when small blocks of free space are scattered across the memory, since the preallocated size can vary from request to request.

Inspired by classical virtual memory and paging techniques in operating systems, the vLLM team introduced Page Attention [6], a method that enables KV cache to be stored in non-contiguous physical memory by retaining a block table that maps logical blocks to physical blocks. The method partitions the KV cache into blocks to be stored in physical memory and uses a KV cache manager with a block table to track allocations. As a result, vLLM significantly reduces external fragmentation and leaves only slight internal fragmentation, depending on the block size.

Furthermore, the vLLM team further recognise the need to share the KV cache, as modern decoding algorithms, such as beam search, generate k candidate sequences for a given prompt. To support this, they designed the system so that a physical memory block in vLLM could be referred to by multiple logical memory blocks. Additionally, they added a reference count to a physical memory block and adopted a copy-on-write mechanism. Whenever a given sequence in beam search diverges and needs to update a physical memory block, if the reference count is greater than 1, vLLM will copy the block to another location before modifying it. This approach solved the redundant memory issue, but still performs redundant computation when multiple sequences share the same prefix, as the Paged Attention processes each sequence independently.

To address the redundant computation, Hydragen [5] introduced new efficient attention computation with shared prefixes. The computation was implemented in 2 steps: attention decomposition and inter-sequence batching. Attention decomposition splits the full attention computation across the shared prefixes and suffixes, then combines them later. Inter-sequence batching groups sequences that share the same prefix and efficiently computes the attention by leveraging hardware properties, essentially transforming matrix-vector multiplication into hardware-friendly matrix-matrix multiplication.

Apart from these, when a model is hosted on multiple GPUs, efficient KV cache transfer is also necessary to improve the performance. CacheGen [8] leverages KV cache distributional properties to encode them into compact bitstreams. Thunderserve [4] quantised the KV cache before transferring among GPU instances to reduce communication overhead.

3.2 Prefill-Decode Scheduling and Placement Strategies

Systems such as vLLM [6] and Hydragen [5] work on unified prefill-decode serving. However, this colocation leads to strong prefill-decode interference. A prefill step usually takes much longer than the decode step. When they are batched together, the prefill step might delay the execution of decode steps, increasing TPOT. Similarly, the inclusion of decode steps also contributes to increased TTFT. To address this issue, research such as DeepSpeed-FastGen [3] and Sarathi [1] introduced chunked prefills with piggyback decoding. Essentially, they chunked prefill into blocks and arranged the decoding steps to follow the complete execution of a chunk. This idea alleviates the impact of prefill on TPOT, but it does not eliminate the trade-off.

Splitwise [11], on the other hand, extensively studies the differences in computational and memory access patterns of prefill and decode stages on multiple generations of GPUs. They decided to split these stages across different specialised hardware to improve utilisation and save energy and costs. By disaggregation, developers could place compute-bound prefill stages on high-FLOPS devices, and place memory-bound decode stages on devices optimised for memory bandwidth and KV cache access patterns. Thunderserve [4] also shows that A40 is more cost-effective than 3090Ti in the prefill stage, and vice versa in the decode stage. DistServe [16] further extends this idea of disaggregating the prefill and decode stages by creating separate first-come-first-served queues in the inference server. DistServe also created two different placement algorithms depending on the cross-node bandwidth. In high bandwidth clusters, KV cache transmission rate is negligible, so DistServe can deploy prefill and decode instances without any constraints. If cross-node bandwidth is a limitation, DistServe ensures that the prefill and corresponding decode instances are placed within the same node, and then optimizes the parallelism configuration within that single node.

These disaggregation insights have created another line of research on placement algorithms. As clusters in the cloud and data centres can have heterogeneous GPUs with different interconnects, such as high-bandwidth InfiniBand, NVLink, or the common low-bandwidth PCIe. Based on the hardware configurations, one can formulate the placement algorithm as a linear programming problem [9], with constraints on network bandwidth, memory capacity, compute resources, and latency requirements.

3.3 Multi-node Deployment

Multi-node deployment on a cloud platform provides a scalable, cost-effective environment for LLM inference. However, cloud platforms usually consist of heterogeneous devices and slow interconnects, such as PCIe interconnect instead of NVLink and InfiniBand [4], hence it is crucial to avoid the assumption of a homogeneous environment with high-speed interconnects as it is inaccurate. The deployment plan thereby has to consider two important factors: model placement through the best parallelisation strategies (Tensor and Pipeline Parallelism) and network bandwidth.

Helix [9] attempted to formulate these two factors in LLM inference as a max-flow problem. The max-flow problem is formulated on directed, weighted graphs, where the nodes represent GPU instances, and the edges capture both hardware heterogeneity and network bandwidth. This approach allows Helix to optimise both model placement and request scheduling at the same time. To obtain the maximised serving throughput in the max-flow problem, Helix implemented Mixed Integer Linear Programming (MILP) with constraints to find the model placements. In Helix, we only need to run model placement once, and each request will be assigned its own individual inference pipeline for output computation.

Thunderserve [4] further decomposes the deployment plan into a two-level solution. The upper level determines model placement, and the lower level determines the best parallelism strategies. Thunderserve team also showed that the upper-level problem is essentially a job shop scheduling problem (JSSP) hence they implemented the well-known tabu search to solve this NP-hard problem. Furthermore, a light-weight rescheduling mechanism is also implemented to enable adaptation to workload shifts.

Other lines of research, such as PerLLM [14] and POLCA [10], also explored LLM inference in the cloud. PerLLM integrates the upper confidence bound algorithm to optimise resource scheduling and resource allocation. POLCA investigated power patterns in both training and inference, then attempted to use power capping for power management.

4. Taxonomy and Comparison

| Paper | Main Contribution | KV Cache Management & Memory Optimization | Prefill-Decode Scheduling and Placement Strategies | Multi-Node Deployment |

|---|---|---|---|---|

| vLLM | PagedAttention for KV cache management | Paged attention with virtual memory-style paging | Unified prefill-decode serving with continuous batching | Single-node deployment with homogeneous GPUs |

| Hydragen | Shared-prefix attention optimization | Prefix-sharing KV cache for common prompts | Unified prefill-decode serving | Single-node deployment with homogeneous GPUs |

| DistServe | Prefill-decode disaggregation | Separate KV cache per phase with high-bandwidth transfer between instances | Disaggregated prefill and decode scheduling with separate FCFS queues | Multi-node homogeneous cluster with high-bandwidth interconnects (NVLink, InfiniBand) |

| Helix | Max-flow optimization for heterogeneous clusters | KV cache size estimation with transfer across heterogeneous nodes | Joint optimization of placement and scheduling via max-flow model | Multi-node heterogeneous cluster with network-aware MILP-based placement |

| ThunderServe | Phase splitting with adaptive scheduling | Paged attention, FlashAttention, and KV cache compression for inter-phase communication | Disaggregated prefill and decode assignment using Tabu search | Multi-node deployment optimized for low-bandwidth environments (PCIe) |

5. Future Work

The research presented spans memory management, scheduling, and system-wide deployment. The impact of attention computation on memory management and computational speed has highlighted the importance of model-system co-design. Originally, most models used standard Multi-head attention (MHA) [13]. Subsequently, Multi-query attention (MQA) [12], Grouped-query attention (GQA) [2] and Multi-head latent attention (MLA) [7] were introduced to optimise for both memory and compute. FlashAttention, Paged Attention, and Hydragen were also implemented at the system level and have improved attention performance across various mechanisms. However, future work could investigate different model-system co-designs to fully exploit the efficiency gains of newer attention variants like MQA, GQA, and MLA.

Scheduling strategy in disaggregated prefill and decode has become widespread due to its benefits in reducing interference. However, most optimisation mechanisms use only throughput and latency to find the best scheduling strategies. Future work could investigate incorporating energy efficiency into the optimisation objective, ensuring the deployment of LLM inference is both cost-effective and responsible. More work on the environmental sustainability of LLM inference is also needed to quantify the energy footprint, thereby creating a framework to balance sustainability needs with the demand for high-quality LLMs.

Helix and ThunderServe both recognise the importance of tuning the right parameters for Tensor and Pipeline parallelism, as well as their sensitivity to network bandwidth. Tensor Parallelism partitions a single model layer across multiple GPUs, hence, it requires a high-bandwidth interconnect to perform AllReduce and AllGather efficiently. While Pipeline parallelism partitions multiple layers across multiple GPUs vertically, their bandwidth requirements are not high. This has encouraged network topology-aware research in order to leverage these characteristics. Additionally, models such as Mixture-of-Experts (MoE) activate only a subset of models during inference, thereby introducing Expert Parallelism that requires careful load balancing. This is another interesting line of research worth the effort.

References

-

Amey Agrawal, Ashish Panwar, Jayashree Mohan, Nipun Kwatra, Bhargav S Gulavani, and Ramachandran Ramjee. Sarathi: Efficient LLM inference by piggybacking decodes with chunked prefills. arXiv preprint arXiv:2308.16369, 2023.

-

Joshua Ainslie, James Lee-Thorp, Michiel De Jong, Yury Zemlyanskiy, Federico Lebron, and Sumit Sanghai. GQA: Training generalized multi-query transformer models from multi-head checkpoints. arXiv preprint arXiv:2305.13245, 2023.

-

Connor Holmes, Masahiro Tanaka, Michael Wyatt, Ammar Ahmad Awan, Jeff Rasley, Samyam Rajbhandari, Reza Yazdani Aminabadi, Heyang Qin, Arash Bakhtiari, Lev Kurilenko, et al. DeepSpeed-FastGen: High-throughput text generation for LLMs via MII and DeepSpeed-inference. arXiv preprint arXiv:2401.08671, 2024.

-

Youhe Jiang, Fangcheng Fu, Xiaozhe Yao, Taiyi Wang, Bin Cui, Ana Klimovic, and Eiko Yoneki. ThunderServe: High-performance and cost-efficient LLM serving in cloud environments. arXiv preprint arXiv:2502.09334, 2025.

-

Jordan Juravsky, Bradley Brown, Ryan Ehrlich, Daniel Y Fu, Christopher Ré, and Azalia Mirhoseini. Hydragen: High-throughput LLM inference with shared prefixes. arXiv preprint arXiv:2402.05099, 2024.

-

Woosuk Kwon, Zhuohan Li, Siyuan Zhuang, Ying Sheng, Lianmin Zheng, Cody Hao Yu, Joseph Gonzalez, Hao Zhang, and Ion Stoica. Efficient memory management for large language model serving with PagedAttention. In Proceedings of the 29th Symposium on Operating Systems Principles, pages 611–626, 2023.

-

Aixin Liu, Bei Feng, Bin Wang, Bingxuan Wang, Bo Liu, Chenggang Zhao, Chengqi Deng, Chong Ruan, Damai Dai, Daya Guo, et al. DeepSeek-V2: A strong, economical, and efficient mixture-of-experts language model. arXiv preprint arXiv:2405.04434, 2024.

-

Yuhan Liu, Hanchen Li, Yihua Cheng, Siddhant Ray, Yuyang Huang, Qizheng Zhang, Kuntai Du, Jiayi Yao, Shan Lu, Ganesh Ananthanarayanan, et al. CacheGen: KV cache compression and streaming for fast large language model serving. In Proceedings of the ACM SIGCOMM 2024 Conference, pages 38–56, 2024.

-

Yixuan Mei, Yonghao Zhuang, Xupeng Miao, Juncheng Yang, Zhihao Jia, and Rashmi Vinayak. Helix: Serving large language models over heterogeneous GPUs and network via max-flow. In Proceedings of the 30th ACM International Conference on Architectural Support for Programming Languages and Operating Systems, Volume 1, pages 586–602, 2025.

-

Pratyush Patel, Esha Choukse, Chaojie Zhang, Íñigo Goiri, Brijesh Warrier, Nithish Mahalingam, and Ricardo Bianchini. Characterizing power management opportunities for LLMs in the cloud. In Proceedings of the 29th ACM International Conference on Architectural Support for Programming Languages and Operating Systems, Volume 3, pages 207–222, 2024.

-

Pratyush Patel, Esha Choukse, Chaojie Zhang, Aashaka Shah, Íñigo Goiri, Saeed Maleki, and Ricardo Bianchini. Splitwise: Efficient generative LLM inference using phase splitting. In 2024 ACM/IEEE 51st Annual International Symposium on Computer Architecture (ISCA), pages 118–132. IEEE, 2024.

-

Noam Shazeer. Fast transformer decoding: One write-head is all you need. arXiv preprint arXiv:1911.02150, 2019.

-

Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. Advances in Neural Information Processing Systems, 30, 2017.

-

Zheming Yang, Yuanhao Yang, Chang Zhao, Qi Guo, Wenkai He, and Wen Ji. PerLLM: Personalized inference scheduling with edge-cloud collaboration for diverse LLM services. arXiv preprint arXiv:2405.14636, 2024.

-

Gyeong-In Yu, Joo Seong Jeong, Geon-Woo Kim, Soojeong Kim, and Byung-Gon Chun. Orca: A distributed serving system for Transformer-based generative models. In 16th USENIX Symposium on Operating Systems Design and Implementation (OSDI 22), pages 521–538, 2022.

-

Yinmin Zhong, Shengyu Liu, Junda Chen, Jianbo Hu, Yibo Zhu, Xuanzhe Liu, Xin Jin, and Hao Zhang. DistServe: Disaggregating prefill and decoding for goodput-optimized large language model serving. In 18th USENIX Symposium on Operating Systems Design and Implementation (OSDI 24), pages 193–210, 2024.